In recent years, there has been a significant shift in how artificial intelligence (AI) processes data. Traditionally, AI computations were handled in centralized data centers, requiring data to be transmitted to powerful cloud servers for processing before sending results back to user devices. While this model has enabled significant advancements in AI applications, it has also introduced several limitations, including increased latency, concerns about data privacy, and heavy reliance on continuous internet connectivity. To address these challenges, a new trend known as “Edge AI” has emerged, where AI computations are performed directly on local devices such as smartphones, personal computers, IoT devices, and sensors.

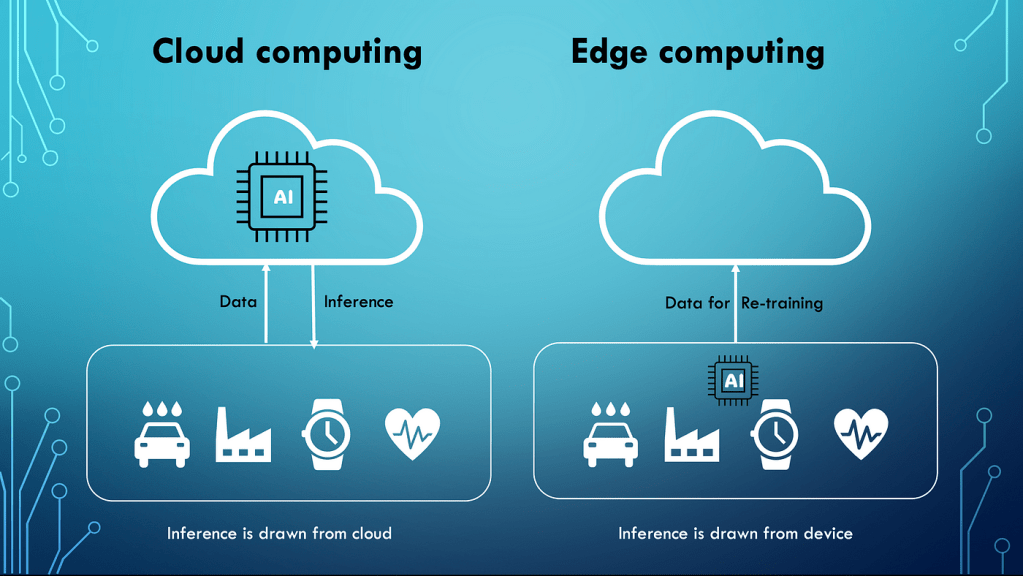

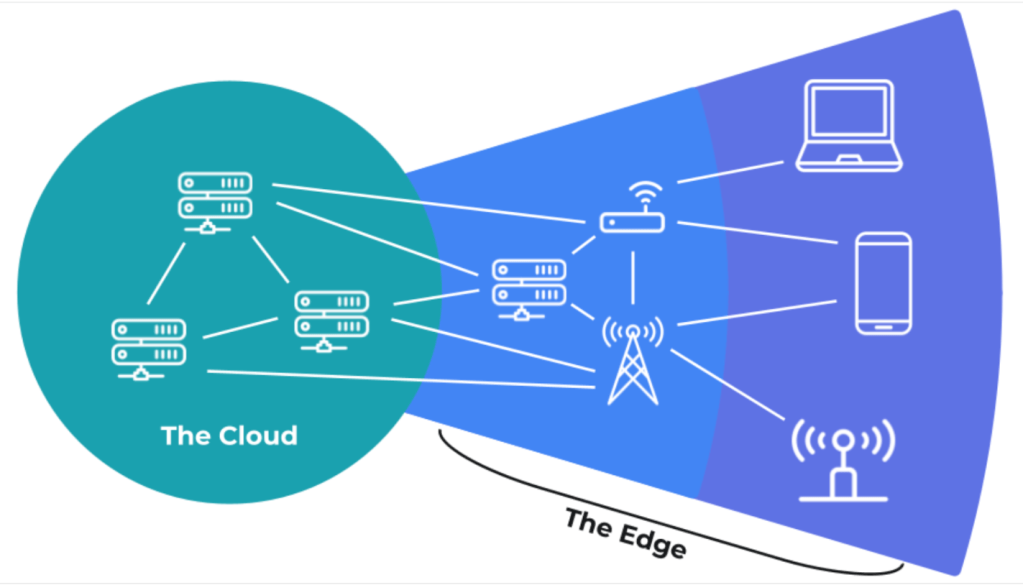

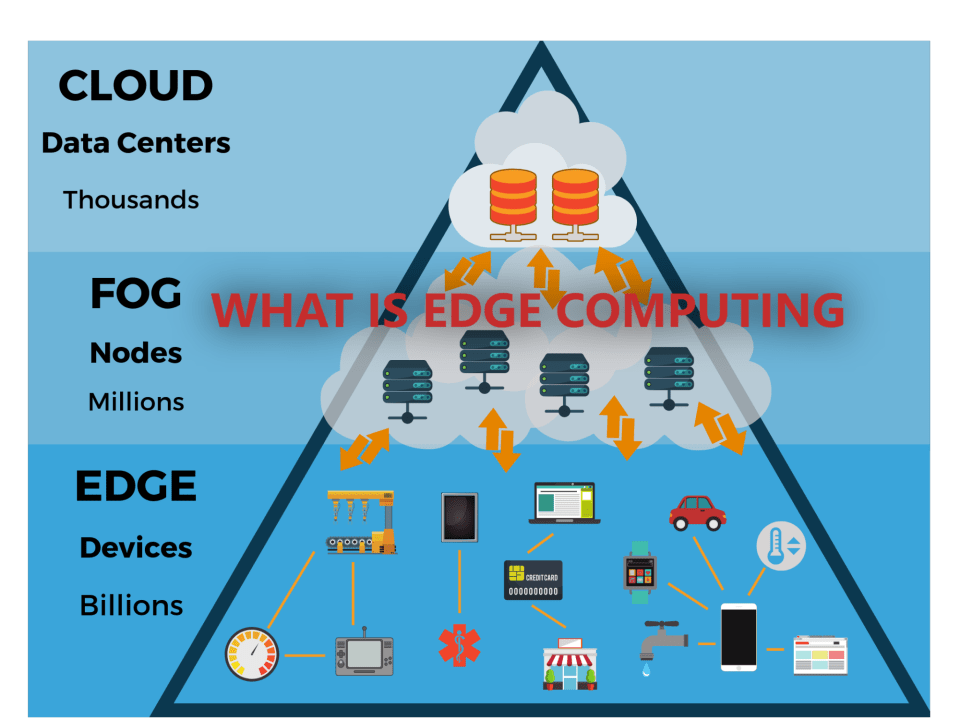

Edge AI represents a paradigm shift in AI architecture by decentralizing data processing and bringing intelligence closer to the source of data collection. Unlike cloud-based AI, which relies on remote data centers for computation, Edge AI processes information on-device, allowing for real-time analysis and decision-making without the need to send large volumes of data back and forth over a network. This transition has been driven by advancements in hardware, particularly in specialized AI chips like Google’s Edge TPU and Apple’s Neural Engine, which enable sophisticated AI computations to be executed efficiently on smaller, less power-intensive devices.

The Evolution of Edge AI

The transition from cloud-based AI to Edge AI is being accelerated by the rapid evolution of AI hardware, improvements in semiconductor technology, and the increasing adoption of edge computing frameworks. As industries continue to embrace Edge AI for its efficiency, privacy, and real-time processing capabilities, the future of AI will likely be defined by a hybrid model that combines the strengths of both cloud and edge computing. This hybrid approach will enable AI applications to leverage cloud computing for large-scale model training while deploying lightweight, optimized models on edge devices for real-time inference and decision-making. Edge AI represents a fundamental shift in how AI is deployed and utilized, making intelligent computing more decentralized, efficient, and widely accessible. As technology continues to advance, Edge AI will play a crucial role in shaping the next generation of AI-driven applications across various sectors, from healthcare and automotive to smart cities and consumer electronics.

Artificial intelligence has undergone a significant transformation in recent years, particularly in how data is processed and utilized. Traditionally, AI applications relied heavily on cloud computing, a model often referred to as cloud AI. In this setup, data generated by user devices—whether from smartphones, IoT sensors, or industrial machines—is transmitted to centralized cloud data centers, where AI models process the information and return the results. This cloud-centric approach has been instrumental in enabling sophisticated AI applications, as it allows for the use of high-performance computing resources, large-scale data storage, and powerful deep-learning algorithms. However, as AI has become more integrated into everyday life and mission-critical applications, the limitations of cloud-based AI have become more apparent, leading to the rise of Edge AI.

One of the biggest challenges of cloud AI is latency: the delay between sending data to the cloud, processing it, and receiving the results. For applications that require real-time decision-making, even milliseconds of delay can have serious consequences. In autonomous vehicles, for example, any lag in processing road conditions, detecting obstacles, or making split-second navigation decisions can be the difference between safety and disaster. Similarly, in industrial automation, where robots rely on AI-powered sensors to perform precise tasks, delays in processing sensor data can lead to inefficiencies, production errors, or safety hazards. Cloud AI, despite its immense computational power, cannot guarantee real-time responsiveness because every request depends on a network round trip, which makes it a poor fit for the most time-sensitive applications.
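
To make the latency argument concrete, the short sketch below computes how far a vehicle travels while it waits for a result. The speed and the two latency figures are illustrative assumptions, not measurements; real values depend on the network, the model, and the hardware.

```python
def distance_travelled_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance covered (in metres) while waiting latency_ms for a result."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * (latency_ms / 1000)


# Assumed, illustrative latencies for the two deployment styles.
scenarios = [("on-device inference", 10), ("cloud round trip", 100)]

for label, latency_ms in scenarios:
    metres = distance_travelled_m(speed_kmh=100, latency_ms=latency_ms)
    print(f"{label}: {latency_ms} ms -> {metres:.2f} m travelled at 100 km/h")
```

Under these assumptions the vehicle covers roughly ten times more road before it can react when the decision depends on a cloud round trip.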

Another key concern with cloud AI is bandwidth usage and the cost associated with transferring large amounts of data. Modern AI applications, particularly those involving high-resolution video analysis, real-time surveillance, or large-scale IoT networks, generate massive data streams that need to be processed continuously. When all this data is sent to the cloud, it puts a strain on network bandwidth and increases operational costs. In cases where connectivity is unstable or limited—such as in remote agricultural areas, offshore energy installations, or disaster response zones—relying on cloud AI becomes impractical. The need to reduce dependency on constant internet connectivity has driven industries toward Edge AI, where processing happens directly on local devices, reducing network congestion and lowering data transmission costs.
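
A rough back-of-the-envelope calculation shows why streaming everything to the cloud strains bandwidth. The sketch below compares continuous video upload with sending only small event records after on-device analysis. The bitrate, camera count, and event sizes are hypothetical values chosen for illustration.

```python
def daily_stream_upload_gb(bitrate_mbps: float, streams: int, hours: float = 24.0) -> float:
    """Gigabytes uploaded per day when every stream is sent to the cloud."""
    megabits = bitrate_mbps * streams * hours * 3600
    return megabits / 8 / 1000  # megabits -> megabytes -> gigabytes (decimal units)


def daily_event_upload_gb(events_per_day: int, bytes_per_event: int, devices: int) -> float:
    """Gigabytes uploaded per day when devices send only detected events."""
    return events_per_day * bytes_per_event * devices / 1e9


# Hypothetical deployment: 50 cameras at 4 Mbps each, versus
# 500 one-kilobyte event records per camera per day.
cloud_gb = daily_stream_upload_gb(bitrate_mbps=4, streams=50)
edge_gb = daily_event_upload_gb(events_per_day=500, bytes_per_event=1000, devices=50)

print(f"stream everything: {cloud_gb:.0f} GB/day")
print(f"send events only:  {edge_gb:.3f} GB/day")
```

The exact ratio depends entirely on the assumed numbers, but the pattern holds generally: results of local analysis are far smaller than the raw data they summarize.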

Beyond performance issues, privacy and security concerns have also fueled the shift from cloud AI to Edge AI. Cloud computing requires data to be transmitted to and stored on remote servers, which raises questions about data sovereignty, compliance with regulations, and protection against cyber threats. Sensitive information, whether medical records from wearable health devices, financial transactions from mobile banking, or personal data from smart home devices, faces increased exposure when sent to the cloud. Privacy regulations like the General Data Protection Regulation (GDPR) in Europe and the California Consumer Privacy Act (CCPA) have placed strict requirements on how companies handle user data, making Edge AI an attractive alternative. By processing data locally on user devices, Edge AI allows personal information to stay on the device, reducing the risks of data breaches, unauthorized access, and regulatory violations.

Edge AI fundamentally reshapes the AI ecosystem by bringing computation closer to the data source. Instead of relying on centralized cloud servers, AI models are deployed directly on edge devices, including smartphones, embedded systems, and industrial IoT sensors. This shift decentralizes AI processing, allowing for greater autonomy and localized decision-making. In healthcare, for example, Edge AI enables wearable devices to analyze heart rate variability, detect early signs of cardiovascular issues, and alert users without needing to transmit sensitive data to a remote server. In retail, smart cameras powered by Edge AI can process customer foot traffic, detect suspicious behavior, and enhance security in real-time, all while minimizing data exposure. In smart cities, Edge AI is being used for intelligent traffic management, where cameras and sensors analyze congestion patterns and adjust traffic signals without relying on cloud processing.

Another domain where Edge AI is making an impact is predictive maintenance in industrial automation. Traditionally, industrial equipment would rely on cloud-based AI systems to analyze performance data and predict when machinery might fail. However, this method often involved delays and bandwidth costs. Edge AI enables machines to process data locally, detect anomalies in real-time, and prevent failures before they occur. This not only improves operational efficiency but also reduces downtime and maintenance costs.

Despite its advantages, the transition from cloud AI to Edge AI does not mean that cloud computing is becoming obsolete. Instead, a hybrid approach is emerging, where cloud AI and Edge AI work together to optimize performance. The cloud is still essential for training large AI models, storing historical data, and performing complex computations that require vast amounts of resources. Edge AI, on the other hand, is better suited for real-time inference and localized processing. This hybrid model allows organizations to balance computational efficiency, cost, and security, leveraging cloud AI for large-scale model training while deploying lighter, optimized models at the edge for real-time applications.
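
The predictive-maintenance pattern described above, local anomaly detection with the cloud reserved for heavier work, can be sketched in a few lines. This is a minimal illustration using a rolling z-score, not a production method. The class name, window size, threshold, and vibration readings are all assumptions made for the example.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags readings that deviate strongly from a recent window of values."""

    def __init__(self, window: int = 50, threshold: float = 3.0, min_baseline: int = 10):
        self.readings = deque(maxlen=window)
        self.threshold = threshold
        self.min_baseline = min_baseline

    def update(self, value: float) -> bool:
        """Return True if value is anomalous relative to the recent window."""
        is_anomaly = False
        if len(self.readings) >= self.min_baseline:
            mu = mean(self.readings)
            sigma = pstdev(self.readings)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        if not is_anomaly:
            # Keep outliers out of the baseline so one spike does not mask the next.
            self.readings.append(value)
        return is_anomaly


# Hypothetical vibration readings: steady operation followed by one spike.
detector = RollingAnomalyDetector()
vibration = [1.0, 1.2] * 10 + [5.0, 1.1]

# Only readings flagged on the device would be forwarded to the cloud.
to_cloud = [v for v in vibration if detector.update(v)]
print(to_cloud)  # only the 5.0 spike is flagged
```

In a hybrid setup, the forwarded anomalies could feed cloud-side retraining or fleet-wide analysis while routine readings never leave the machine.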

As Edge AI continues to evolve, advancements in hardware and software are accelerating its adoption. Chip manufacturers such as NVIDIA, Qualcomm, and Apple have developed specialized AI processors that enable efficient, low-power computations on edge devices. AI frameworks, including Google’s TensorFlow Lite and Meta’s PyTorch Mobile, are making it easier for developers to build and deploy AI models optimized for edge environments. Research in model compression techniques, such as pruning and quantization, is further improving the efficiency of AI models, allowing them to run smoothly on resource-constrained devices. Complementary approaches such as federated learning, which trains models across many devices without centralizing the raw data, extend these gains to the training side.
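
As an illustration of the quantization idea mentioned above, the sketch below maps floating-point weights to 8-bit integers with a single scale factor and then recovers approximate values. It is a simplified, framework-independent example of symmetric per-tensor quantization. Real toolchains add calibration, per-channel scales, and other refinements, and the weight values here are made up.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: floats -> integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integer representation."""
    return [q * scale for q in quantized]


# Made-up weights for illustration.
weights = [0.82, -1.0, 0.31, 0.0, -0.47]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# Each restored value differs from the original by at most half a step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(quantized)
print(f"scale={scale:.6f}, max error={max_error:.6f}")
```

Each weight now needs one byte instead of four, at the cost of a rounding error bounded by half the scale.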

The shift from cloud-based AI to Edge AI marks a significant evolution in AI architecture, driven by the need for lower latency, reduced bandwidth usage, improved privacy, and real-time decision-making. As AI applications become more embedded in daily life—powering everything from smart assistants and industrial automation to autonomous vehicles and healthcare monitoring—the ability to process data closer to the source will become increasingly important. Edge AI is not just a technological advancement; it is a strategic shift that will shape the future of AI, enabling smarter, faster, and more secure applications across diverse industries.

Advantages of Edge AI

- Reduced Latency: Processing data locally eliminates the time it takes to send data to the cloud and wait for a response, resulting in faster decision-making. This is crucial for applications like autonomous driving, where split-second decisions are necessary.

- Enhanced Privacy: Keeping data on-device means sensitive information doesn’t need to be transmitted over the internet, reducing the risk of data breaches and unauthorized access. For instance, health data collected by wearable devices can be analyzed locally, ensuring personal information remains private.

- Bandwidth Efficiency: By processing data at the edge, the amount of data sent to central servers is minimized, leading to reduced bandwidth usage and associated costs. This is particularly beneficial in remote areas with limited connectivity.

- Reliability: Edge AI systems can continue to operate even when there’s limited or no internet connectivity, ensuring consistent performance in critical applications. For example, industrial machines equipped with Edge AI can monitor and respond to issues in real-time without relying on cloud connectivity.
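
The reliability point in the list above implies a simple design pattern: the device acts on its own results immediately and uploads them later, when a connection is available. The sketch below shows one way to do that with a bounded store-and-forward buffer. The class and the `send` and `is_online` callables are hypothetical names introduced for this example.

```python
from collections import deque


class StoreAndForwardBuffer:
    """Holds locally produced results until connectivity returns."""

    def __init__(self, capacity: int = 1000):
        # When the buffer is full, the oldest entries are dropped first.
        self.pending = deque(maxlen=capacity)

    def record(self, result) -> None:
        """Store a result produced on the device."""
        self.pending.append(result)

    def flush(self, send, is_online) -> int:
        """Upload buffered results while the link is up; return the number sent."""
        sent = 0
        while self.pending and is_online():
            send(self.pending[0])
            self.pending.popleft()  # remove only after a successful send
            sent += 1
        return sent


# Usage with stand-in callables: the device keeps working offline,
# then uploads everything once the connection is back.
buffer = StoreAndForwardBuffer(capacity=100)
for reading in ["ok", "ok", "overheat"]:
    buffer.record(reading)

uploaded = []
print(buffer.flush(uploaded.append, is_online=lambda: False))  # nothing sent offline
print(buffer.flush(uploaded.append, is_online=lambda: True))   # all pending results sent
```

The bounded capacity is a deliberate trade-off for memory-limited devices: during a long outage, the newest results are kept and the oldest are discarded.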

Challenges in Implementing Edge AI

While Edge AI offers numerous benefits, it also presents several challenges:

- Resource Constraints: Edge devices often have limited processing power, memory, and storage compared to centralized data centers. Developing AI models that can operate efficiently within these constraints requires innovative approaches in model design and optimization.

- Security and Privacy: While processing data locally can enhance privacy, it also necessitates robust security measures to protect data on the device. Ensuring data integrity and preventing unauthorized access are critical concerns.

- Scalability: Deploying and managing AI models across a vast number of edge devices can be complex. Ensuring consistent performance and updates across diverse hardware and software environments requires effective management strategies.

- Data Management: Handling data across numerous devices introduces challenges in data synchronization, storage, and processing. Developing efficient data management strategies is essential for effective Edge AI deployment.
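
The resource-constraint challenge in the list above can be made tangible with a simple estimate of how much storage a model's weights need at different numeric precisions. The parameter count and memory budget below are hypothetical, and the estimate ignores activations and runtime overhead, so it is a lower bound rather than a full sizing method.

```python
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def model_size_mb(num_params: int, dtype: str) -> float:
    """Approximate size of the weights alone, in megabytes (decimal)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e6


def fits_budget(num_params: int, dtype: str, budget_mb: float) -> bool:
    """Check whether the weights fit within a device's memory budget."""
    return model_size_mb(num_params, dtype) <= budget_mb


# Hypothetical model with 5 million parameters and an 8 MB budget.
for dtype in BYTES_PER_PARAM:
    size = model_size_mb(5_000_000, dtype)
    verdict = "fits" if fits_budget(5_000_000, dtype, budget_mb=8) else "too large"
    print(f"{dtype}: {size:.1f} MB -> {verdict}")
```

Under these assumptions only the 8-bit version fits, which is why precision reduction is often the first step when targeting small devices.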

Advancements Paving the Way for Edge AI

Recent developments in hardware and software are making Edge AI more feasible:

- Specialized Hardware: Companies are developing chips specifically designed for AI processing on edge devices. For example, Google’s Edge TPU is an application-specific integrated circuit (ASIC) designed to run machine learning models on edge devices efficiently.

- Efficient Algorithms: Researchers are creating AI models that require less computational power, making them suitable for edge devices. Techniques like model quantization and pruning help reduce the size and complexity of models without significantly compromising performance.

- Software Frameworks: New frameworks are emerging to support the development and deployment of AI models on edge devices. These frameworks simplify the process of optimizing and running AI models on various hardware platforms.
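
Of the techniques named in the list above, pruning is the simplest to show in isolation. The sketch below implements basic magnitude pruning: it zeroes the fraction of weights with the smallest absolute values. It is a minimal illustration with made-up weights. Practical pipelines usually prune gradually and fine-tune the model afterward to recover accuracy.

```python
def magnitude_prune(weights, sparsity: float):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    num_to_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    prune_idx = set(by_magnitude[:num_to_prune])
    return [0.0 if i in prune_idx else w for i, w in enumerate(weights)]


# Made-up weights: half of them are removed at 50% sparsity.
weights = [0.9, -0.05, 0.3, 0.01, -0.7, 0.2]
print(magnitude_prune(weights, sparsity=0.5))
```

The zeroed weights save storage and computation only when the model is stored in a sparse format or run on hardware that can skip zeros.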

Real-World Applications of Edge AI

Edge AI is transforming various industries by enabling intelligent processing closer to the data source:

- Healthcare: Wearable devices equipped with Edge AI can monitor vital signs in real-time, alerting users and healthcare providers to potential health issues promptly. For instance, smartwatches can detect irregular heart rhythms and notify users to seek medical attention.

- Manufacturing: In industrial settings, Edge AI enables predictive maintenance by analyzing data from machinery to predict failures before they occur, reducing downtime and maintenance costs. Smart cameras with Edge AI can also detect defects on production lines in real-time, ensuring quality control.

- Autonomous Vehicles: Self-driving cars rely on Edge AI to process data from sensors and cameras in real-time, allowing them to navigate safely without depending on cloud-based computations.

- Smart Cities: Edge AI powers various applications in smart cities, such as traffic management systems that analyze data from road sensors to optimize traffic flow and reduce congestion.

- Retail: Retailers use Edge AI for inventory management, analyzing data from shelf sensors to monitor stock levels and automatically reorder products when necessary.

Conclusion: The Future of AI at the Edge

The rise of Edge AI marks a pivotal shift in the evolution of artificial intelligence, bringing intelligence closer to the source of data generation and fundamentally transforming how AI operates across industries. As businesses, researchers, and technology leaders continue to push the boundaries of AI capabilities, the transition from cloud-based AI to Edge AI represents a move toward faster, more efficient, and privacy-conscious computing. By enabling real-time decision-making, reducing reliance on internet connectivity, and enhancing data security, Edge AI is setting the stage for a new era of smart devices and intelligent automation. Whether in healthcare, automotive, manufacturing, or smart cities, the applications of Edge AI are vast and growing, unlocking new opportunities for innovation. While challenges such as hardware limitations, security concerns, and scalability need to be addressed, advancements in AI model optimization, specialized hardware, and hybrid AI frameworks will continue to drive Edge AI adoption. Ultimately, the future of AI will not be confined to massive data centers but will be distributed across millions of interconnected devices, creating a world where intelligence is embedded seamlessly into everyday technology, making our interactions with AI more intuitive, efficient, and secure.

Dr. Mukesh Jain is a gold medallist engineer in Electronics and Telecommunication Engineering from MANIT Bhopal. He obtained his MBA from the Indian Institute of Management Ahmedabad and his Master of Public Administration from the Kennedy School of Government, Harvard University, along with the Edward Mason Fellowship. He received three awards at Harvard University: the Mason Fellow award and the Lucius N. Littauer Fellow award, for exemplary academic achievement, public service, and potential for future leadership, and the Raymond & Josephine Vernon award, for academic distinction and significant contribution to the Mason Fellowship Program. He received his PhD in Strategic Management from IIT Delhi. His research has focused on capacity building of organizations using positive psychology interventions, growth mindset, and lateral thinking.

Mukesh Jain joined the Indian Police Service in 1989 in the Madhya Pradesh cadre. As an IPS officer, he held many challenging assignments, including Superintendent of Police of Raisen and Mandsaur Districts; Inspector General of Police, Criminal Investigation Department; Additional DGP, Cybercrime; Transport Commissioner, Madhya Pradesh; and Special DG Police. He has also served as Joint Secretary in the Ministry of Power and the Ministry of Social Justice and Empowerment, Government of India. As Joint Secretary, Department of Persons with Disabilities, he conceptualized and implemented the ‘Accessible India Campaign’, launched by Hon’ble Prime Minister Shri Narendra Modi in December 2015. The campaign aims to create accessibility in physical infrastructure, transportation, and IT for persons with disabilities, and it has been a flagship program of the Ministry of Social Justice and Empowerment, Government of India, since 2015.

Dr. Mukesh Jain has authored many books on public policy and positive psychology. His book ‘Excellence in Government’ is recommended reading for many public policy courses. His book ‘A Happier You: Strategies to achieve peak joy in work and life using science of Happiness’, published by a leading publisher, received a book of the year award in 2022. His other books are ‘Mindset for Success and Happiness’, ‘Seeds of Happiness’, and ‘What they don’t teach you at IITs and IIMs’.

He is a visiting faculty member at many business schools and reputed training institutes and an expert trainer on the science of happiness. He has conducted more than 250 workshops on the Science of Happiness at prominent B-schools and administrative training institutes in India, including the Indian School of Business (Hyderabad and Mohali), the National Police Academy, IIFM, and the National Productivity Council.